Designing trustworthy AI for healthcare products.

AI is entering one of the most human domains: healthcare. It helps people track sleep, manage chronic conditions, monitor mental health, and navigate loneliness. It listens, advises, and sometimes comforts. Yet despite these advances, hesitation remains, not because the algorithms are weak, but because the experience does not always feel reliable.

In this context, trust is not just an emotional response. It is about system reliability: the confidence that an AI assistant will behave predictably, communicate clearly, and acknowledge uncertainty responsibly. In healthcare, that reliability is not optional. Even when AI performs well, people still hesitate. They ask: Can I rely on this? Does it really understand me? What happens if it’s wrong?

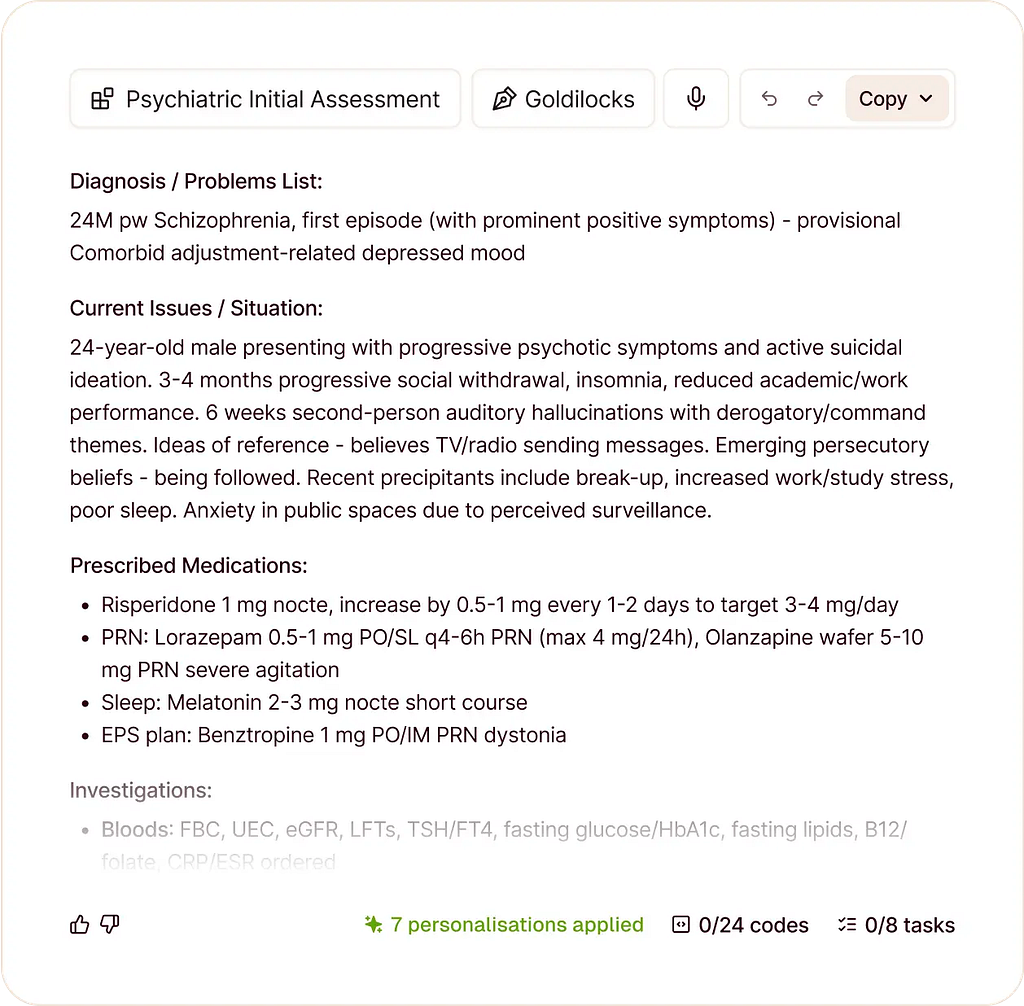

Patients will not act on guidance they do not trust, and clinicians will not adopt tools they cannot interpret. Yet most discussions about healthcare AI focus on accuracy, regulation, or data quality. The lived experience of reliability, the part users actually engage with, often remains underdesigned.

Trust is not built by algorithms alone. It is shaped by clarity, empathy, and predictable behavior. Micro-decisions matter: how an AI care assistant expresses uncertainty, the tone it uses when discussing symptoms, and how transparently it explains its limits. These design choices determine whether people rely on a system when it matters most.

For healthcare designers, the role extends beyond usability. We design for psychological safety, ensuring technology does not confuse, mislead, or cause harm. Roxane Leitão (2024) describes this shift as UX moving from interface design to risk mediation in AI-driven healthcare.

Trust is a design problem, not an AI problem

Conversations about trust in AI often drift toward technical questions: How accurate is the model? How clean is the data? How fair is the output?

These questions matter, but they miss a core truth: users do not experience algorithms. They experience interfaces. Jakob Nielsen’s observation that AI is the first new UI paradigm in 60 years underscores this shift: intelligence is now expressed through interaction, not screens alone. People engage with tone, timing, and transparency. They form mental and emotional models of a system based on how it behaves under uncertainty.

Take AI-powered symptom checkers. Many rely on similar clinical models and datasets, yet users trust some far more than others. The difference is rarely accuracy alone. It lies in how uncertainty is framed, when human escalation occurs, and whether the system explains its reasoning.

When a chatbot says, “Based on what you shared, this could be consistent with a mild infection,” versus “You might have an infection and should seek care immediately,” the underlying model may be identical. The experience is not. One response supports informed judgment; the other triggers anxiety. That shift affects behavior, follow-up, and long-term trust.

The emotional layer of accuracy

Healthcare AI is becoming more precise, but precision alone does not drive trust. What matters is whether people feel they can rely on the system in practice.

In its reporting on AI and chest X-ray diagnosis, The New York Times described a recurring pattern: AI systems performed well in benchmarks, yet experienced radiologists made better decisions in real clinical settings. The story emphasized that the problem was not technical failure, but what happens when AI moves from controlled environments into everyday medical practice. Radiologists explained that they interpret images in context, weighing patient history, ambiguity, and uncertainty. By contrast, the AI systems featured in the article could detect patterns but struggled to explain why a finding mattered or how confident it was. Clinicians did not reject AI; they stressed that it worked best as an assistant, not an authority. When conclusions arrived without context or visible uncertainty, hesitation followed, not because the AI was wrong, but because it was difficult to rely on safely.

Interfaces that feel inconsistent, cold, or evasive undermine confidence, even when the model is strong. Conversely, systems that communicate clearly, behave consistently, and acknowledge uncertainty support reliable decision-making, even when answers are imperfect.

As AI becomes part of daily health routines, designers play a key role in:

- Turning complex care flows into clear signals users can trust and act on

- Deciding when the system should sound confident, cautious, or humble

- Communicating risk and uncertainty without creating unnecessary fear

Designing the micro-interactions of reliability

Reliance is built through micro-interactions:

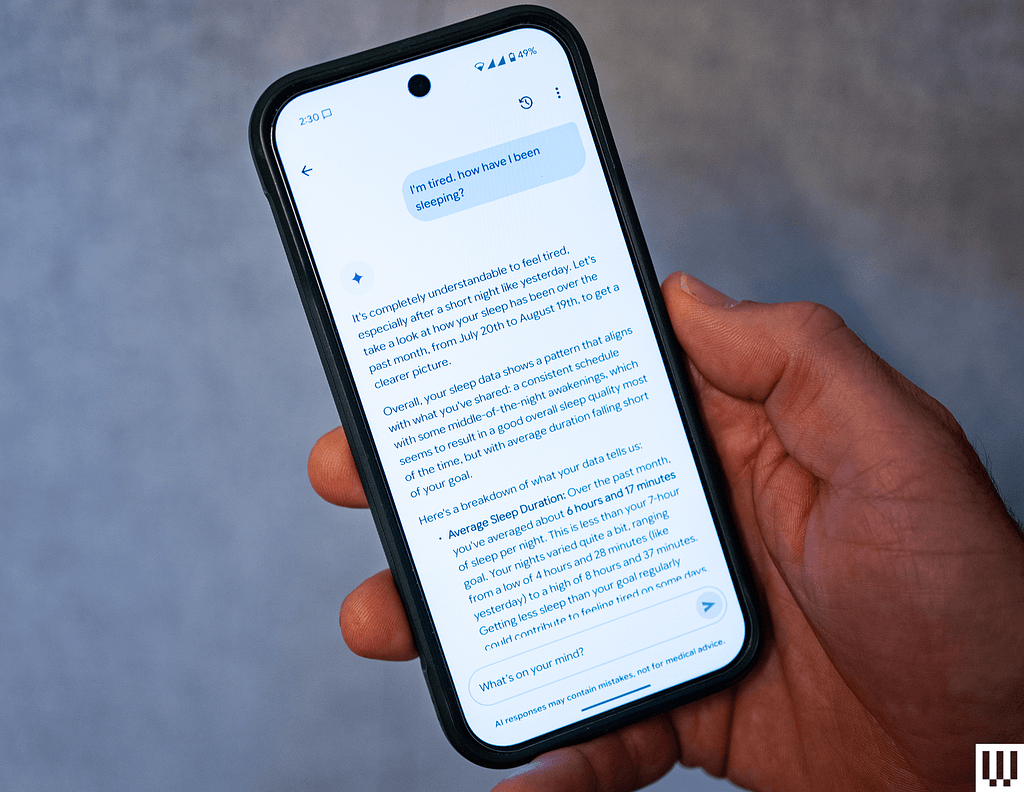

- How the AI care assistant responds to “I’m tired”

- Whether it remembers prior concerns or treats every interaction as new

- How it communicates limits or inability to help

- Whether it sets realistic expectations instead of overpromising

These signals accumulate, creating either a sense of dependable consistency or its absence.

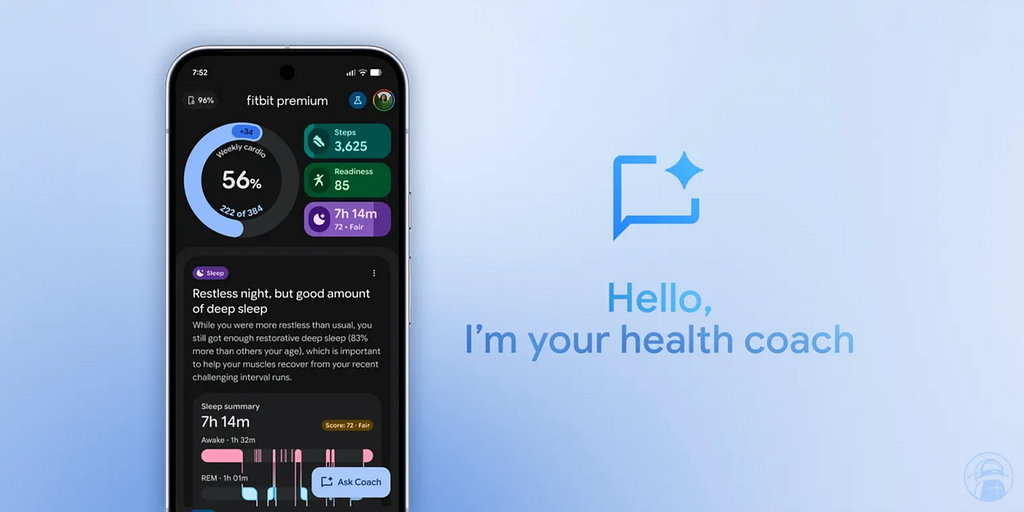

Fitbit’s AI Coach offers a useful example. As Julian Chokkattu described in Wired (2025), Fitbit’s shift toward an AI-powered health companion focuses less on prediction and more on explanation. It does not diagnose or claim medical authority, yet millions rely on it daily for sleep insights, recovery guidance, and activity suggestions. Its credibility comes from consistency, data grounding, and clear boundaries. By explaining why it makes suggestions (for example, “Here’s a breakdown of what your data tells us…”) and framing guidance as optional rather than prescriptive, the system informs rather than instructs. Credibility, here, is designed, not declared.

Oura © provides another example. Its AI-generated readiness and sleep insights frame daily scores as directional signals, grounded in trends across sleep, heart rate variability, and recovery data. The interface consistently explains why a score changes and emphasizes context, reminding users that readiness fluctuates and should inform decisions, not dictate them. By avoiding absolutes and making uncertainty visible, Oura © supports sustainable, informed behavior. Trust emerges from predictable, transparent guidance, not precision alone.

The design paradox of healthcare AI

Healthcare AI operates in a space that is both deeply human and highly regulated. Users expect empathy, yet systems must remain factual. They want guidance, but AI cannot diagnose. They seek simplicity, but transparency introduces complexity.

Balancing these tensions is where UX becomes strategy. Design is no longer about polish or delight. It defines the relationship between humans and technology in moments of vulnerability. As G & Co. (2025) describe, healthcare UX increasingly functions as care choreography, aligning emotional, informational, and clinical layers. Trust in healthcare AI is not a technical output; it is a design outcome.

Designing for trust: three core principles

Progressive transparency

The AI care assistant explains its actions without overwhelming users. It communicates what it knows, what it does not, and how it reached its conclusions.

For example, instead of saying, “This application does not provide medical advice,” the system might say, “I’m not a doctor, but I can help you understand your symptoms and decide whether to speak with a healthcare professional.” Making uncertainty visible, such as indicating “70% confident,” while offering next steps reassures users and preserves agency.
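One way to picture progressive transparency in code is a small function that pairs every statement with a visible confidence level and a next step. This is only a minimal sketch: the names `AssistantMessage` and `frame_uncertainty` are hypothetical, and the 0.5 cut-off is an illustrative placeholder, not a clinical threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssistantMessage:
    text: str       # what the assistant says about the finding
    next_step: str  # the action offered to the user


def frame_uncertainty(finding: str, confidence: float) -> AssistantMessage:
    """Phrase a model output so uncertainty and a next step are both visible.

    The 0.5 cut-off below is an assumption for illustration only.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")

    if confidence < 0.5:
        # Too uncertain to name a likely explanation: say so plainly.
        return AssistantMessage(
            text=(
                "I'm not a doctor, and I can't tell from what you shared "
                "what might be causing this."
            ),
            next_step="I can help you decide whether to speak with a healthcare professional.",
        )

    percent = round(confidence * 100)
    return AssistantMessage(
        text=(
            "I'm not a doctor, but based on what you shared, this could be "
            f"consistent with {finding} (about {percent}% confident)."
        ),
        next_step="I can help you decide whether to speak with a healthcare professional.",
    )
```

Calling `frame_uncertainty("a mild infection", 0.7)` produces a hedged statement that shows the confidence level and always offers a next step, so the user keeps the final decision.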

Calibrated empathy

The AI care assistant adjusts its tone to the user’s context, acknowledging emotion and uncertainty without amplifying anxiety. AI mental-health products like Wysa © and Woebot © exemplify this approach, guiding users toward coping strategies or human support without emotive excess.

If a user says, “I’m feeling anxious,” a helpful response might be “Here are a few techniques that may help” or “I can help you find professional support,” rather than a generic emotional reaction like “I’m sorry you’re feeling that way ❤️.”

Active listening cues such as “It sounds like…”, avoiding overly friendly avatars, and maintaining emotional consistency make empathy functional: it recognizes feelings while guiding toward clear next steps.

Consistent reliability

The AI care assistant behaves predictably across interactions, so users know what to expect. Consistency appears in voice, style, context awareness, privacy considerations, and responsiveness. Making the system’s actions visible reduces confusion from sudden silences or unexpected behavior. Holistic consistency also limits AI hallucinations (npj Digital Medicine, 2024) and prevents misleading outputs. Predictable systems reduce cognitive load, letting users focus on health rather than interface interpretation.

Hinge Health illustrates these principles in practice. Its AI-driven programs integrate clinician oversight to maintain progressive transparency, guide interactions with calibrated empathy, and deliver consistent, predictable feedback. This approach reinforces trust, human judgment, and patient agency simultaneously.

DesignOps and collaborative design

In healthcare, a good user experience must also be safe and accountable. DesignOps plays a critical role: key design decisions (ethical, clinical, and psychological) should be documented and defensible. Alignment across UX, product, data, and clinical teams creates shared accountability.

High-performing teams involve clinical co-design early, run cross-disciplinary reviews, and treat user research as validation, measuring trust as a real outcome. When trust becomes a shared goal, the product shifts from tool to companion.

Measuring the UX of trust

When users interact with AI care assistants, clicks and retention are not enough. Emotional insight matters. Beyond engagement metrics, teams increasingly track:

- Perceived reliability: Does this assistant give credible answers?

- Transparency recall: Do users understand its limits?

- Emotional safety: Did the experience reassure or confuse?

These measures reflect a growing shift toward trust as a design KPI, supported by qualitative research and sentiment analysis.
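To make the three measures above concrete, here is a minimal sketch of how a team might aggregate them from a post-session survey. It assumes each response rates the three dimensions on a 1 to 5 scale; the field names and the function `summarize_trust` are hypothetical, not taken from any specific research tool.

```python
from statistics import mean

# Hypothetical survey dimensions, each rated 1 (low) to 5 (high).
TRUST_DIMENSIONS = (
    "perceived_reliability",  # Does this assistant give credible answers?
    "transparency_recall",    # Do users understand its limits?
    "emotional_safety",       # Did the experience reassure or confuse?
)


def summarize_trust(responses: list[dict[str, int]]) -> dict[str, float]:
    """Return the average rating per trust dimension across all responses."""
    if not responses:
        raise ValueError("at least one survey response is required")
    return {
        dimension: mean(response[dimension] for response in responses)
        for dimension in TRUST_DIMENSIONS
    }
```

Averages like these are only a starting point. They become more useful when tracked over time and read alongside qualitative research, not treated as a single score.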

Design as a layer of safety

Safety in healthcare AI is not only technical or clinical; it is experiential.

- Clear disclaimers reduce misuse

- Timely reassurance reduces anxiety

- Predictable care flows reduce cognitive load

The design system, therefore, is not just aesthetic; it’s behavioral infrastructure. It shapes how people feel in moments that matter. That’s a responsibility, not just a craft. In healthcare, good design can make people feel safer and act more safely.

Human-in-the-loop as a design choice

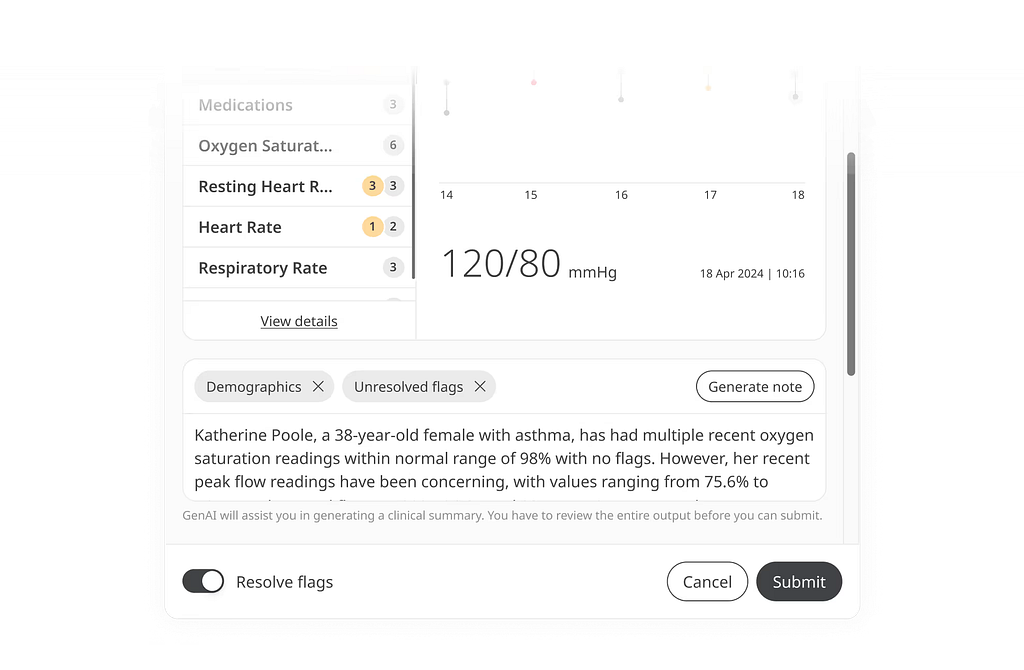

Visible human oversight is a strong trust signal. On the Huma Therapeutics © platform, generative AI synthesizes data and drafts insights, but clinicians review every output. AI supports care without replacing accountability. Making human oversight visible transforms AI from an invisible authority into a transparent assistant. Trust becomes tangible because responsibility remains clear.

What’s powerful about this example isn’t the brand or the technology itself; it’s the design choice to make human oversight visible, intentional, and reassuring. That’s how trust moves from being an abstract value to something tangible in the experience.
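The pattern of "AI drafts, clinician reviews, and the user can see that" can be sketched as a simple gate. This is an illustrative assumption about how such a flow could be modeled, not a description of any vendor's implementation; `DraftInsight`, `approve`, and `patient_view` are hypothetical names.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class DraftInsight:
    text: str                          # AI-generated draft
    reviewed_by: Optional[str] = None  # clinician who signed off, if any

    @property
    def approved(self) -> bool:
        return self.reviewed_by is not None


def approve(draft: DraftInsight, clinician: str) -> DraftInsight:
    """Return a copy of the draft marked as reviewed by the given clinician."""
    return replace(draft, reviewed_by=clinician)


def patient_view(draft: DraftInsight) -> str:
    """Show the insight only after review, and make the oversight visible."""
    if not draft.approved:
        # Never surface unreviewed AI output; explain the wait instead.
        return "A clinician is reviewing this insight before it is shared with you."
    return f"{draft.text}\n\nReviewed by {draft.reviewed_by}."
```

The design choice is that the reviewer's name travels with the message, so accountability is part of what the user reads, not something hidden in a backend log.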

Closing thought

Designing AI for healthcare means designing for trust, vulnerability, and agency, simultaneously. Trust is not abstract. It can be designed, documented, measured, and protected. The goal is not to make machines appear human, but to make technology worthy of human reliance. In digital health, trust is not a luxury; it is a prerequisite.

When we build a health AI assistant or companion, we’re inviting vulnerability. People share their symptoms, their fears, their habits. They’re stepping into a space where mistakes, misunderstandings or miscommunications really matter. Our responsibility as designers is to make that exchange safe, by showing limits, guiding with care, and behaving consistently enough that users know what to expect.

Ultimately, the next frontier is agency: empowering people with control, not just information.

I hope this article sparks new ways of thinking about design in AI healthcare. If you’re interested in going deeper, here are some resources to explore:

- Marcos Alfonso, Anibal M. Astobiza, Ramón Ortega, AI-mediated healthcare and trust. A trust-construct and trust-factor framework for empirical research, 2025.

- Verena Seibert-Giller for UX Magazine, The Psychology of Trust in AI: Why “Relying on AI” Matters More than “Trusting It”, Oct 16, 2025

- Kaustubh Shaw, Human‑Centered Design and Explainable AI: Building Trust in Clinical AI Systems, Oct 2025

- G & Co., Healthcare UX Design: Transforming Patient Journeys for Better Care, 2025

- Roxane Leitão, The role of UX in AI-driven healthcare, Designing for the future, Medium, Sep 29, 2024

- The New York Times, Radiologists outperform AI in diagnosing lung disease from x-rays

- Davy van de Sande, Eline Fung Fen Chung, Jacobien Oosterhoff, Jasper van Bommel, Diederik Gommers, Michel E van Genderen, To warrant clinical adoption AI models require a multi-faceted implementation evaluation, npj Digital Medicine 7, Article 58 (2024)

- Retno Larasati and Anna DeLiddo, Building a Trustworthy Explainable AI in Healthcare, 2020. Chapter from: Human Computer Interaction and Emerging Technologies

- Jakob Nielsen, AI Is First New UI Paradigm in 60 Years, May 19, 2023

- Huma Therapeutics ©, Huma collaborates with Google Cloud to improve healthcare through generative AI, 2023

- Julian Chokkattu for Wired, The Fitbit App Is Turning Into an AI-Powered Personal Health Coach, Aug 20, 2025

- Reabal Najjar, Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging, 2023

- Asgari, E., Montaña-Brown, N., Dubois, M. et al. A framework to assess clinical safety and hallucination rates of LLMs for medical text summarisation. npj Digit. Med. 8, 274 (2025). https://doi.org/10.1038/s41746-025-01670-7

Reliability by design was originally published in UX Collective on Medium.